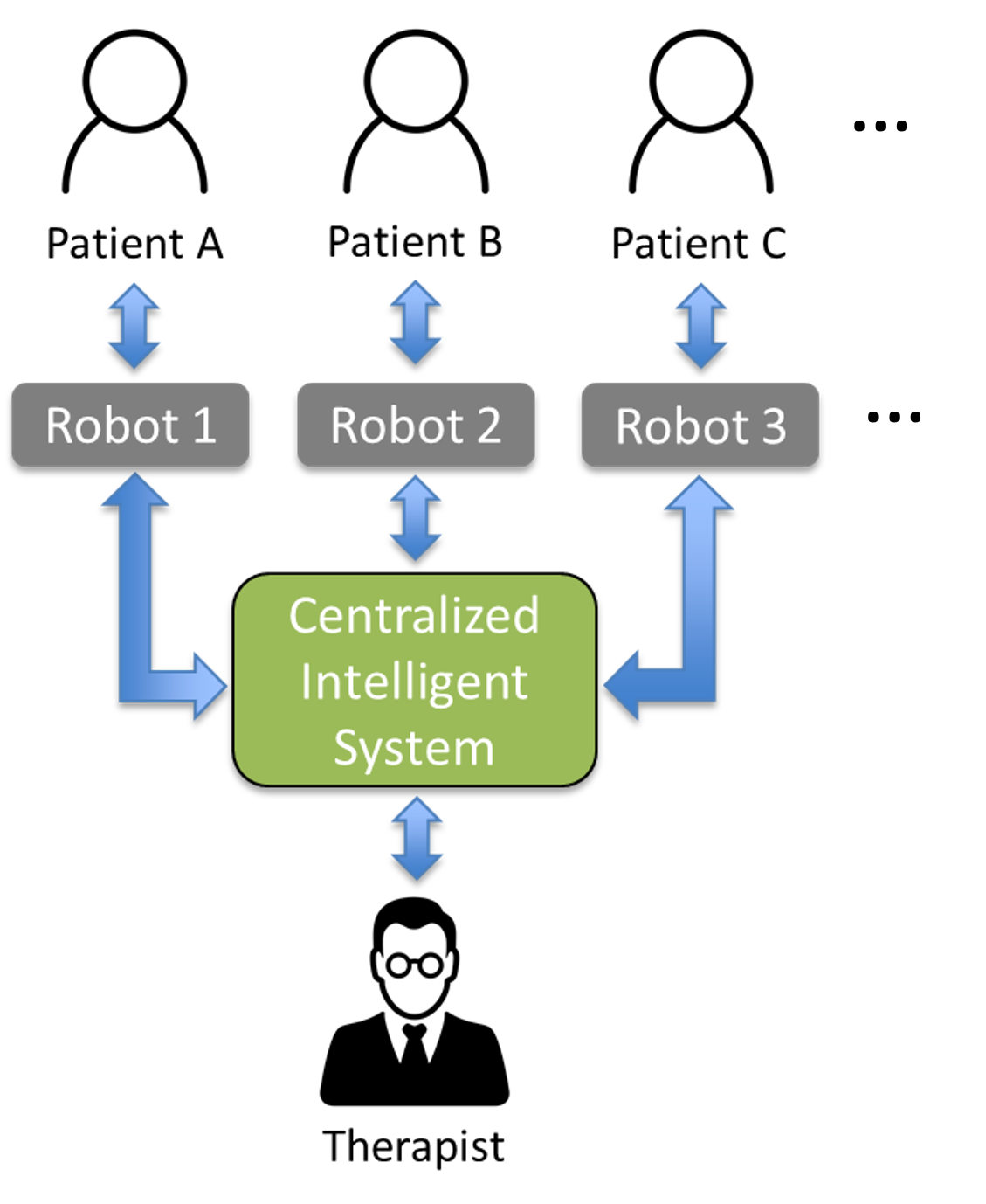

NRI: FND: The Robotic Rehab Gym: Specialized Co-Robot Trainers Working with Multiple Human Trainees for Optimal Learning Outcomes, sponsored by NSF

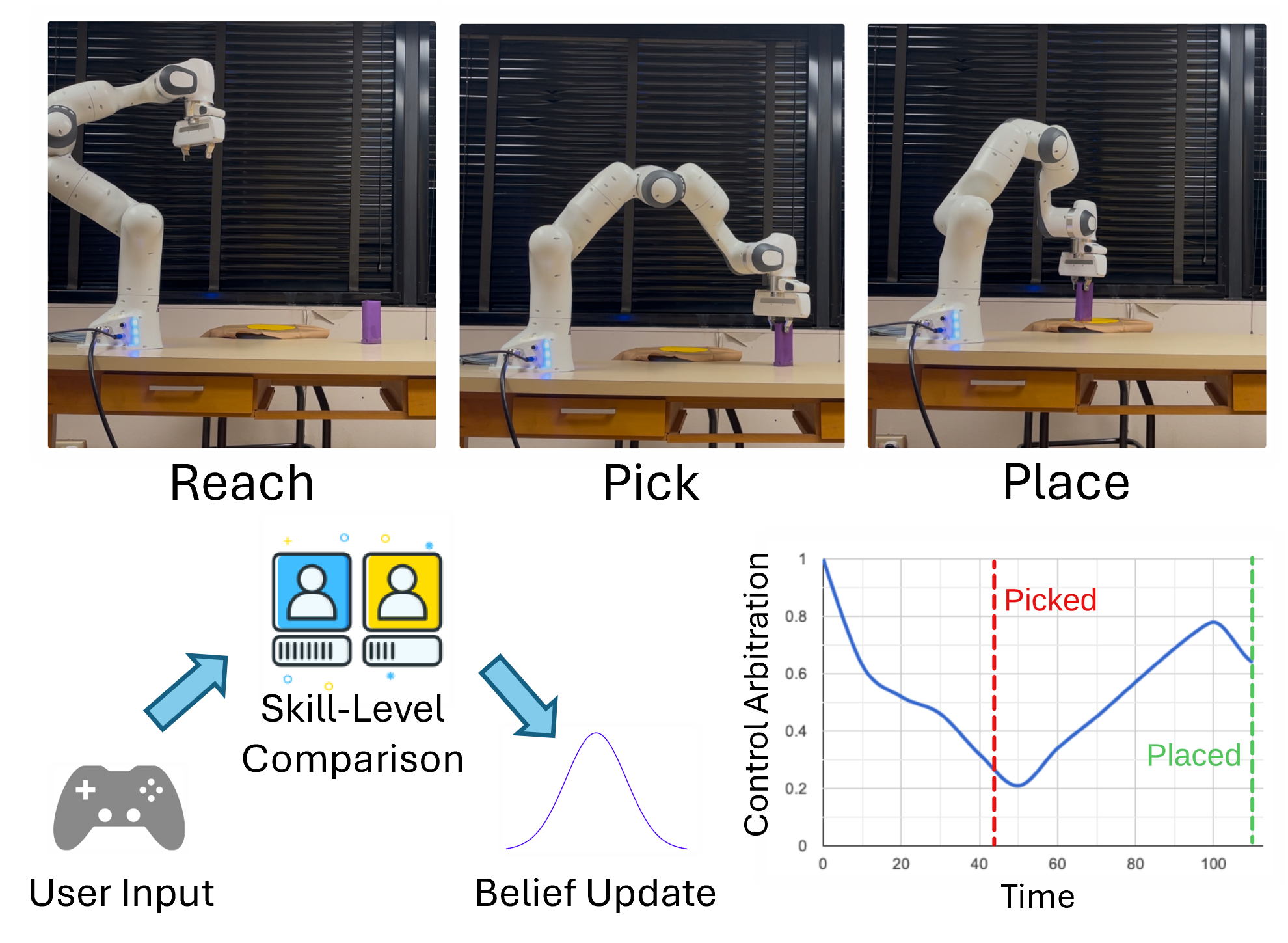

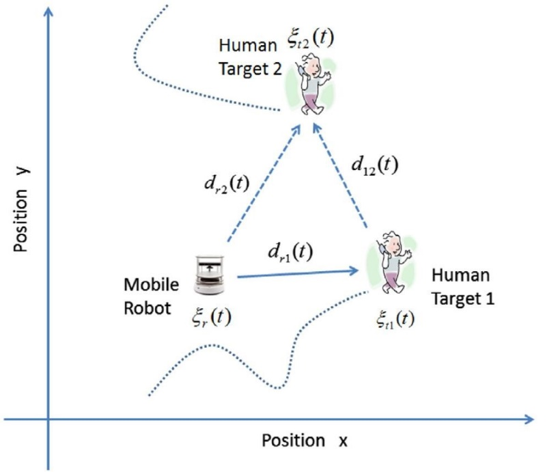

Overview: A robotic rehabilitation gym is defined as multiple patients training with multiple robots or passive sensorized devices in a group setting. Recent work with such gyms has shown positive rehabilitation outcomes; furthermore, such gyms allow a single therapist to supervise more than one patient, increasing cost-effectiveness. To allow more effective multi-patient supervision in future robotic rehabilitation gyms, this project investigates automated systems that can dynamically assign patients to different robots within a session to optimize the group's overall rehabilitation outcome.
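
As a rough illustration of what dynamic patient-robot assignment could involve, the sketch below treats a single time slot as a small assignment problem. It is not the project's method. The `benefit` matrix, its values, and the function name `best_assignment` are all hypothetical, and the sketch assumes that each robot trains one patient at a time and that there are at least as many robots as patients.

```python
from itertools import permutations


def best_assignment(benefit):
    """Return (assignment, total) that maximizes the summed benefit.

    benefit[p][r] is a hypothetical estimate of how much patient p
    gains from training on robot r during the next time slot.
    assignment[p] is the index of the robot given to patient p.
    """
    n_patients = len(benefit)
    n_robots = len(benefit[0])
    best, best_total = None, float("-inf")
    # Exhaustively try every way of giving each patient a distinct robot.
    for robots in permutations(range(n_robots), n_patients):
        total = sum(benefit[p][r] for p, r in enumerate(robots))
        if total > best_total:
            best, best_total = robots, total
    return best, best_total


# Made-up example: 3 patients (rows) and 3 robots (columns).
benefit = [
    [3, 1, 2],
    [2, 4, 1],
    [1, 2, 5],
]
assignment, total = best_assignment(benefit)
print(assignment, total)
```

Exhaustive search is only practical for a handful of patients and robots. Re-running such a step at intervals within a session, with updated benefit estimates, would be one simple way to make the assignment dynamic.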